Welcome to my homepage!

Here are some quick facts about me:

🦾 My research interests are in robotics, spanning reasoning, policy learning, perception, and generative approaches.

🧑‍🎓 I recently graduated from the University of Houston with a B.S. in Computer Science and Biomedical Engineering.

🧑‍💻 I am a Research Intern at the University of Washington and a full-time Engineer at Microsoft.

Overview

I am a Vietnamese-American born and raised in Houston, TX. After graduation, I moved to Seattle to conduct predoctoral research at the University of Washington in the Robotics and State Estimation Lab and the Personal Robotics Lab, advised by Dieter Fox (Founding Robotics Director at Ai2 and NVIDIA) and Siddhartha Srinivasa (Founding Robotics Director at Amazon Robotics AI, Partner at Madrona Ventures). My research investigates robot manipulation through action policies and robot learning systems centered on rich, diverse data representations for embodied reasoning, such as task generalization and long-horizon planning. As a prospective doctoral student, I am deeply grateful to be supported by the National Science Foundation CISE Graduate Fellowship!

Outside of research, I am a software engineer at Microsoft building Copilot into OneNote.

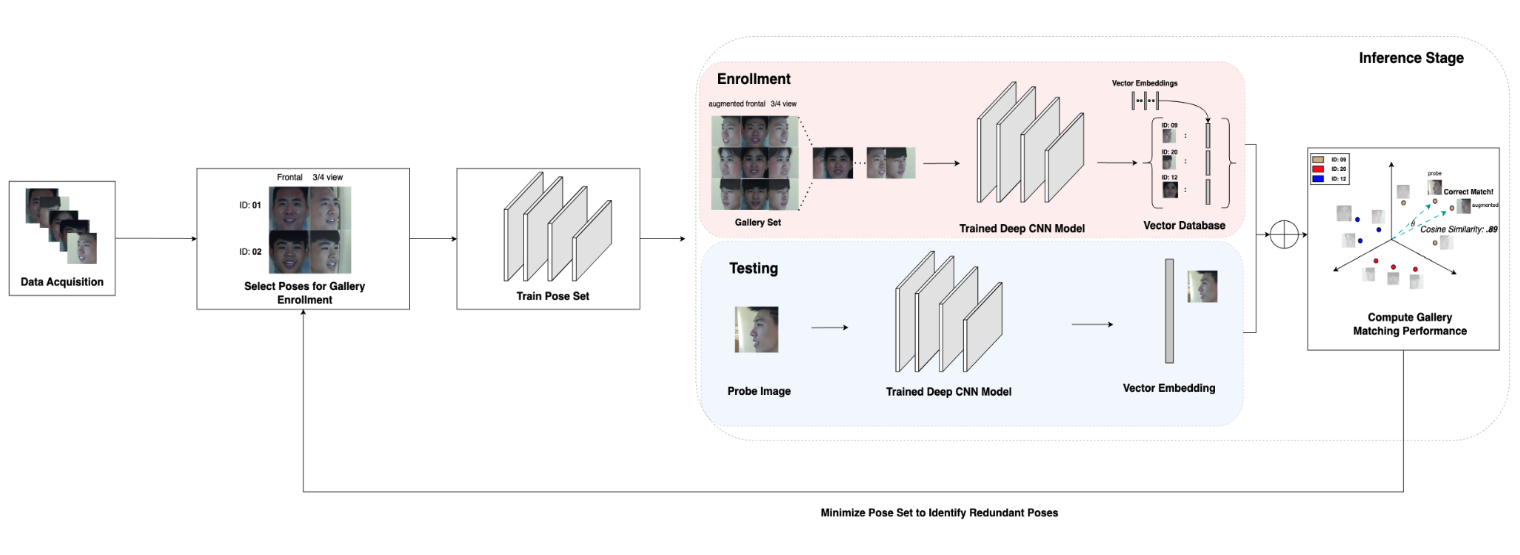

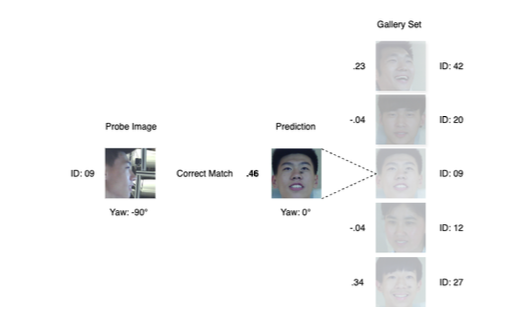

Previously, I studied Computer Science and Biomedical Engineering at the University of Houston, focusing on Machine Learning and Neural Engineering. Over four years as an undergraduate, I worked on a range of research topics, including brain-machine interfaces, computer vision, and robotics. Notably, I spent over two years with Dr. Shishir Shah (Chief AI Officer at OU) developing pose-invariant methods for face recognition models (Bachelor's Thesis, VISAPP 2025).

For work experience, I spent my past three summers interning at Microsoft, Amazon Web Services, and Northrop Grumman, where I focused on engineering machine learning platforms and applying models to practical enterprise applications.

I always enjoy chatting, so feel free to reach out at carterung [at] gmail [dot] com.

News

August 2025: Selected as a 2025 National Science Foundation Computer and Information Science and Engineering Graduate Fellow!

July 2025: Released RoboEval, exploring granular evaluation for bimanual manipulation.

February 2025: Published as first author at the 20th International Conference on Computer Vision Theory and Applications (VISAPP 2025).

📝 Publications

RoboEval: Where Robotic Manipulation Meets Structured and Scalable Evaluation
Yi Ru Wang, Carter Ung, Christopher Tan, Grant Tannert, Jiafei Duan, Josephine Li, Amy Le, Rishabh Oswal, Markus Grotz, Wilbert Pumacay, Yuquan Deng, Ranjay Krishna, Dieter Fox, Siddhartha Srinivasa
arXiv 2025 (Under Review) · paper / project page / code
RoboEval introduces a unified evaluation suite covering bimanual manipulation tasks with fine-grained metrics, so researchers can compare models beyond binary success/failure.

Minimizing Number of Poses for Pose-Invariant Face Recognition
Carter Ung, Pranav Mantini, Shishir Shah
International Conference on Computer Vision Theory and Applications (VISAPP) 2025 · paper
We study how many viewpoint images are truly needed for robust, pose-invariant face recognition and show that a compact pose set dramatically reduces data-collection overhead.

Dual-VXM: A Prior-Aware Dual-Encoder UNet for Watch-and-Wait Monitoring with Low-Field MRI
Mohammad Javadi, Panagiotis Tsiamyrtzis, Carter Ung, Ernst Leiss, Andrew Webb, Nikolaos Tsekos
Under Review
We present a novel dual-encoder UNet architecture that leverages prior high-field MRI scans to enhance low-field MRI image quality for improved monitoring of Low-Grade Gliomas (LGG).

Minimizing the Number of Poses for Pose-Invariant Face Recognition
Carter Ung
Undergraduate Dissertation, University of Houston 2024 · paper
My honors thesis extends the VISAPP study and details the full experimental pipeline for low-redundancy face-data acquisition.